Diving into Docker: Exploring Architecture and Why It Excels Beyond Virtualization - Day 16

In the realm of modern software development and deployment, Docker has emerged as a transformative tool, revolutionizing the way we build, ship, and run applications. But what exactly is Docker, and how does it differ from traditional virtualization? Let's embark on a journey to uncover the basics of Docker, its architecture, and how it compares to virtualization.

What is Docker?

At its core, Docker is a platform that simplifies the process of packaging, deploying, and managing applications within lightweight, portable containers. These containers encapsulate everything an application needs to run, including code, runtime, system tools, libraries, and dependencies. Docker ensures consistency across different environments, making it easier to develop, test, and deploy software seamlessly.

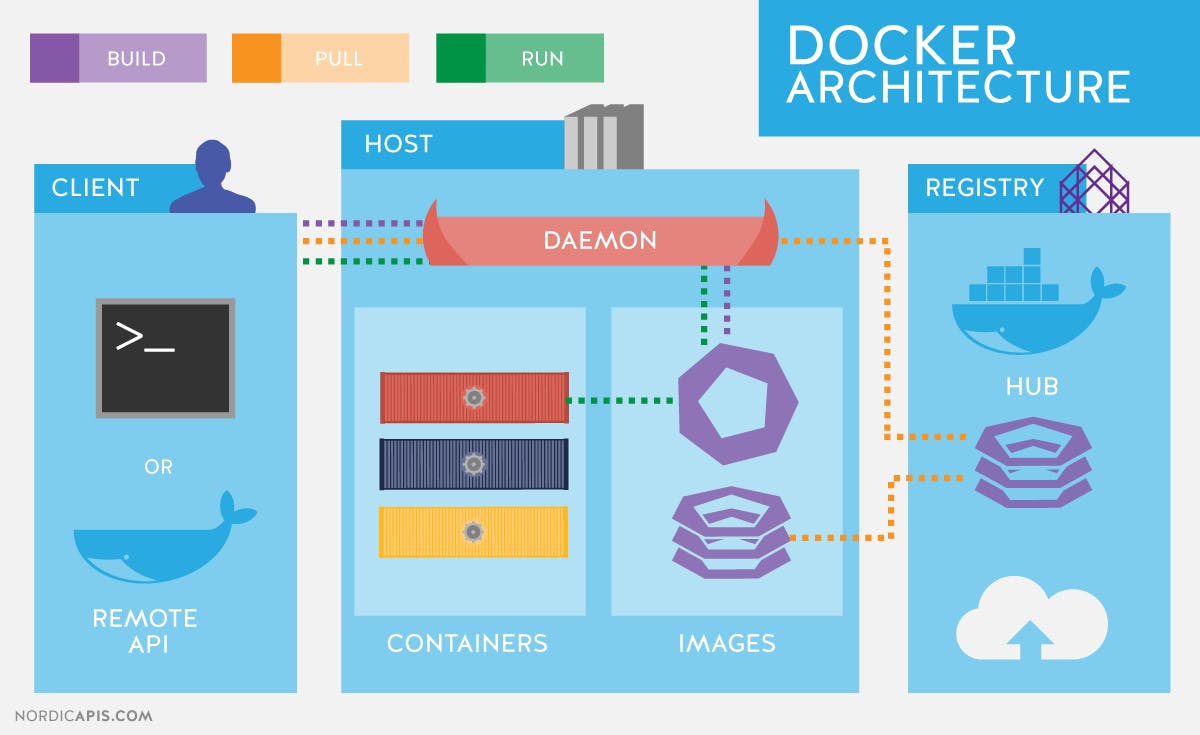

Docker Architecture

Docker's architecture is designed to be modular and flexible, comprising several components that work together to enable containerization. Docker relies on a client-server architecture: the Docker client talks to the Docker daemon (dockerd), the server component of Docker Engine, to manage containers and other Docker objects.

Components of Docker Architecture:

Docker Client:

The Docker client is a command-line tool (CLI) or a graphical user interface (GUI) that users interact with to send commands to the Docker daemon.

It allows users to build, run, and manage Docker containers, images, networks, volumes, and other Docker objects.

Docker Daemon (dockerd):

The Docker daemon, dockerd, is a background service that runs on the host system and forms the server side of Docker Engine. It's responsible for managing Docker objects and handling container runtime operations.

The daemon listens for Docker API requests from the Docker client and executes them, managing container lifecycle, networking, storage, and more.
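To make the client-daemon exchange concrete, here is a minimal sketch of how a few CLI commands correspond to Docker Engine API calls. The endpoint paths are real Engine API routes, shown without the optional version prefix; the names `CLI_TO_API` and `build_request` are hypothetical helpers made up for this example. The sketch only builds the request text. On Linux, the real client would typically send it over the Unix socket at /var/run/docker.sock.

```python
# Illustrative mapping from CLI commands to Engine API calls.
# Paths omit the optional API version prefix.
CLI_TO_API = {
    "docker version": ("GET", "/version"),
    "docker ps": ("GET", "/containers/json"),
    "docker images": ("GET", "/images/json"),
    "docker create": ("POST", "/containers/create"),
    "docker start": ("POST", "/containers/{id}/start"),
    "docker stop": ("POST", "/containers/{id}/stop"),
    "docker rm": ("DELETE", "/containers/{id}"),
}


def build_request(cli_command, container_id=""):
    """Return raw HTTP/1.1 request text for a CLI command (hypothetical helper)."""
    method, path = CLI_TO_API[cli_command]
    path = path.format(id=container_id)
    # The Host value is a placeholder; nothing is sent anywhere in this sketch.
    return f"{method} {path} HTTP/1.1\r\nHost: docker\r\nConnection: close\r\n\r\n"


if __name__ == "__main__":
    print(build_request("docker ps"))
    print(build_request("docker stop", "abc123"))
```

Keeping the mapping in a plain dictionary makes the point that the CLI is mostly a thin front end: each command becomes an HTTP request that the daemon executes.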

Containerization Technology:

At the heart of Docker's architecture is its containerization technology, which allows applications and their dependencies to be packaged into lightweight, portable containers.

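Containers share the host operating system's kernel but run as isolated processes with their own filesystem, network, and process space. On Linux, this isolation comes from kernel namespaces, and control groups (cgroups) limit the resources each container can use.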

Docker Registry:

Docker Registry is a storage and distribution service that stores Docker images. It serves as a central repository for sharing and distributing Docker images across different environments.

Docker Hub is a public registry hosted by Docker, where users can find, pull, and push Docker images. Organizations often set up private Docker registries for internal use and security reasons.
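The registry an image comes from is encoded in its name. The sketch below shows, under simplifying assumptions, how a reference such as nginx or localhost:5000/team/app:2.0 can be split into registry, repository, and tag, with Docker Hub defaults filled in. The function `parse_image_ref` is a hypothetical helper, not Docker's own parser, and it ignores digest references (name@sha256:...). The host registry.example.com is a placeholder.

```python
def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag)."""
    name = ref
    tag = "latest"  # default when no tag is given
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    if colon > last_slash:  # a colon after the last slash marks a tag
        name, tag = name[:colon], name[colon + 1:]
    first, sep, rest = name.partition("/")
    # The first component counts as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest, tag
    # Otherwise assume Docker Hub; official images live under library/.
    repo = name if "/" in name else "library/" + name
    return "docker.io", repo, tag


if __name__ == "__main__":
    for ref in ["nginx", "myuser/app:1.0", "registry.example.com/team/app"]:
        print(ref, "->", parse_image_ref(ref))
```

This is why a bare name like nginx resolves to an image on Docker Hub, while a name that starts with a hostname is pulled from that private registry instead.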

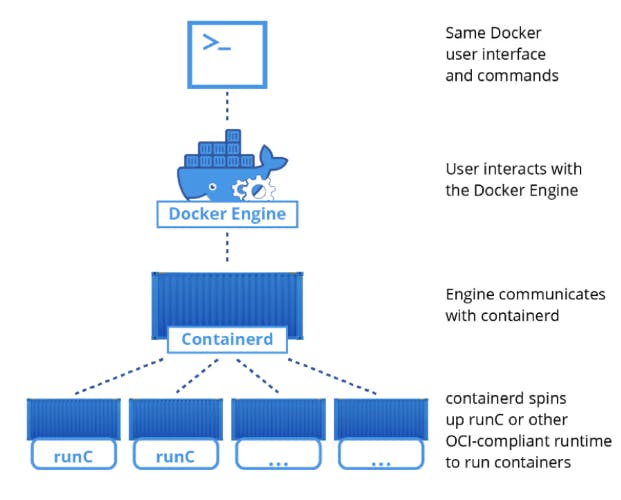

containerd and runc:

containerd is an industry-standard container runtime that manages the container lifecycle, including container creation, execution, and destruction. It serves as the core container runtime within Docker.

runc is a lightweight, portable container runtime used by containerd to execute containers according to the Open Container Initiative (OCI) specification. It provides low-level functionality for running containers securely.
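An OCI runtime is driven by a config.json file that describes the process to run, its root filesystem, and the namespaces to create. The sketch below builds a small config of that shape in Python. The field names follow the OCI runtime specification as I understand it, but this is a partial illustration rather than a complete or validated config, and `minimal_oci_config` and its default values are assumptions made for the example.

```python
import json


def minimal_oci_config(args, rootfs="rootfs", hostname="demo"):
    """Build a partial OCI-style runtime config (illustrative, not validated)."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the command the container runs
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        "root": {"path": rootfs, "readonly": True},  # the container's own filesystem
        "hostname": hostname,
        "linux": {
            # Each namespace gives the process a private view of one resource.
            "namespaces": [
                {"type": "pid"},
                {"type": "network"},
                {"type": "ipc"},
                {"type": "uts"},
                {"type": "mount"},
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sh"]), indent=2))
```

The mount, network, and pid entries correspond to the separate filesystem, network, and process space that each container gets.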

How Docker Works:

When a user interacts with the Docker client, commands are sent to the Docker daemon via the Docker API. The Docker daemon then processes these commands, interacts with the container runtime (containerd and runc), and manages container lifecycle operations, such as creating, starting, stopping, and deleting containers.
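The lifecycle operations mentioned above can be pictured as a small state machine. The following is a simplified sketch, not Docker's actual implementation: it covers only some of the states Docker reports (created, running, paused, exited) and adds a made-up terminal state, removed. The names `TRANSITIONS`, `apply_action`, and `run_sequence` are hypothetical.

```python
# (current state, action) -> next state; None means the container does not exist yet.
TRANSITIONS = {
    (None, "create"): "created",
    ("created", "start"): "running",
    ("exited", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("created", "rm"): "removed",
    ("exited", "rm"): "removed",
}


def apply_action(state, action):
    """Return the next state, or raise if the action is not allowed in this model."""
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        raise ValueError(f"cannot {action!r} a container in state {state!r}") from None


def run_sequence(actions):
    """Apply a list of actions starting from a container that does not exist yet."""
    state = None
    for action in actions:
        state = apply_action(state, action)
    return state


if __name__ == "__main__":
    print(run_sequence(["create", "start", "stop", "rm"]))
```

In this model, removing a running container is rejected, which mirrors how a container normally has to be stopped before it is deleted unless removal is forced.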

Docker images are pulled from registries (such as Docker Hub) or read from the host's local image store if they are already present. These images serve as blueprints for creating containers, containing all the necessary dependencies and configuration required to run an application.

Containers are instantiated from Docker images, each running as isolated processes on the host system. Docker abstracts away the underlying infrastructure complexity, allowing users to focus on developing and deploying applications without worrying about environment inconsistencies.

Docker's architecture simplifies the process of building, shipping, and running applications by leveraging containerization technology. By understanding Docker's components and how they interact, users can effectively manage containers, streamline development workflows, and achieve greater consistency and efficiency in software delivery.

With Docker's modular and scalable architecture, organizations can embrace containerization with confidence, empowering teams to innovate and deliver high-quality software at scale.


Virtualization Architecture

Virtualization is a technology that enables the creation of multiple virtual instances of computer hardware (including servers, storage devices, and networks) within a single physical machine. These virtual instances, known as virtual machines (VMs), operate independently of each other and share the resources of the underlying physical hardware.

Components of Virtualization Architecture:

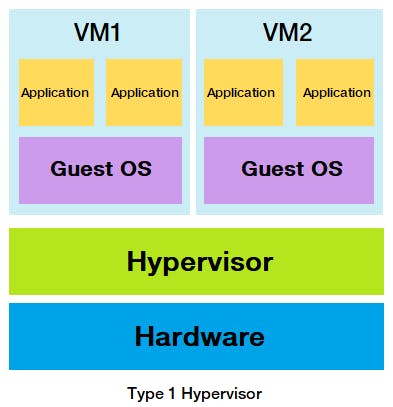

Hypervisor (Virtual Machine Monitor - VMM):

The hypervisor is a software layer that sits between the physical hardware and the virtual machines. It manages and allocates physical hardware resources to multiple VMs.

There are two types of hypervisors:

Type 1 Hypervisor (Bare-Metal): Runs directly on the physical hardware without the need for a host operating system. Examples include VMware ESXi and Microsoft Hyper-V.

Type 2 Hypervisor (Hosted): Runs on top of a host operating system. Users interact with the hypervisor through an application installed on the host OS. Examples include VMware Workstation and Oracle VirtualBox.

Virtual Machine (VM):

A virtual machine is an isolated instance of a guest operating system (OS) running on top of a hypervisor. Each VM behaves like a physical computer with its own CPU, memory, storage, and network interfaces.

Multiple VMs can run concurrently on the same physical hardware, allowing for efficient resource utilization and workload isolation.

Guest Operating System:

Each VM runs its own guest operating system, which can be different from the host operating system. The guest OS reaches the hardware through the hypervisor and, under full virtualization, typically behaves as if it were running on a physical machine.

Physical Hardware:

The physical hardware refers to the underlying computing infrastructure on which the hypervisor and VMs operate. This includes the CPU, memory, storage devices, and network interfaces of the host machine.

Virtual Machine Manager:

The Virtual Machine Manager is a management tool that allows administrators to create, configure, monitor, and manage virtual machines and their resources. It is distinct from the hypervisor, even though the hypervisor (Virtual Machine Monitor) is also often abbreviated as VMM.

Virtual machine managers provide features such as VM provisioning, resource allocation, performance monitoring, and security management.

How Virtualization Works:

When a hypervisor is installed on a physical server, it partitions the hardware resources into multiple virtual environments, each running its own VM. The hypervisor abstracts the underlying hardware, allowing multiple VMs to share physical resources without interference.

Each VM operates independently, running its own operating system and applications. From the perspective of the VM, it has exclusive access to the allocated hardware resources, unaware of the presence of other VMs sharing the same physical machine.
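As a rough illustration of the partitioning described above, here is a toy model of a hypervisor handing out fixed slices of CPU and memory to VMs. It is deliberately simplistic: the `Hypervisor` class and its methods are invented for this example, and it does not allow overcommitting resources, which real hypervisors often do.

```python
from dataclasses import dataclass, field


@dataclass
class Hypervisor:
    """Toy model: tracks fixed CPU and memory allocations per VM."""
    total_cpus: int
    total_mem_mb: int
    vms: dict = field(default_factory=dict)  # name -> (cpus, mem_mb)

    def free(self):
        """Return (free CPUs, free memory in MB) after current allocations."""
        used_cpus = sum(cpus for cpus, _ in self.vms.values())
        used_mem = sum(mem for _, mem in self.vms.values())
        return self.total_cpus - used_cpus, self.total_mem_mb - used_mem

    def create_vm(self, name, cpus, mem_mb):
        """Reserve resources for a new VM, or raise if the host cannot fit it."""
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        free_cpus, free_mem = self.free()
        if cpus > free_cpus or mem_mb > free_mem:
            raise RuntimeError("not enough free resources on the host")
        self.vms[name] = (cpus, mem_mb)


if __name__ == "__main__":
    host = Hypervisor(total_cpus=8, total_mem_mb=16384)
    host.create_vm("web", cpus=2, mem_mb=4096)
    host.create_vm("db", cpus=4, mem_mb=8192)
    print(host.free())
```

Each VM only ever sees its own allocation, which is the sense in which it is unaware of its neighbours on the same physical machine.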

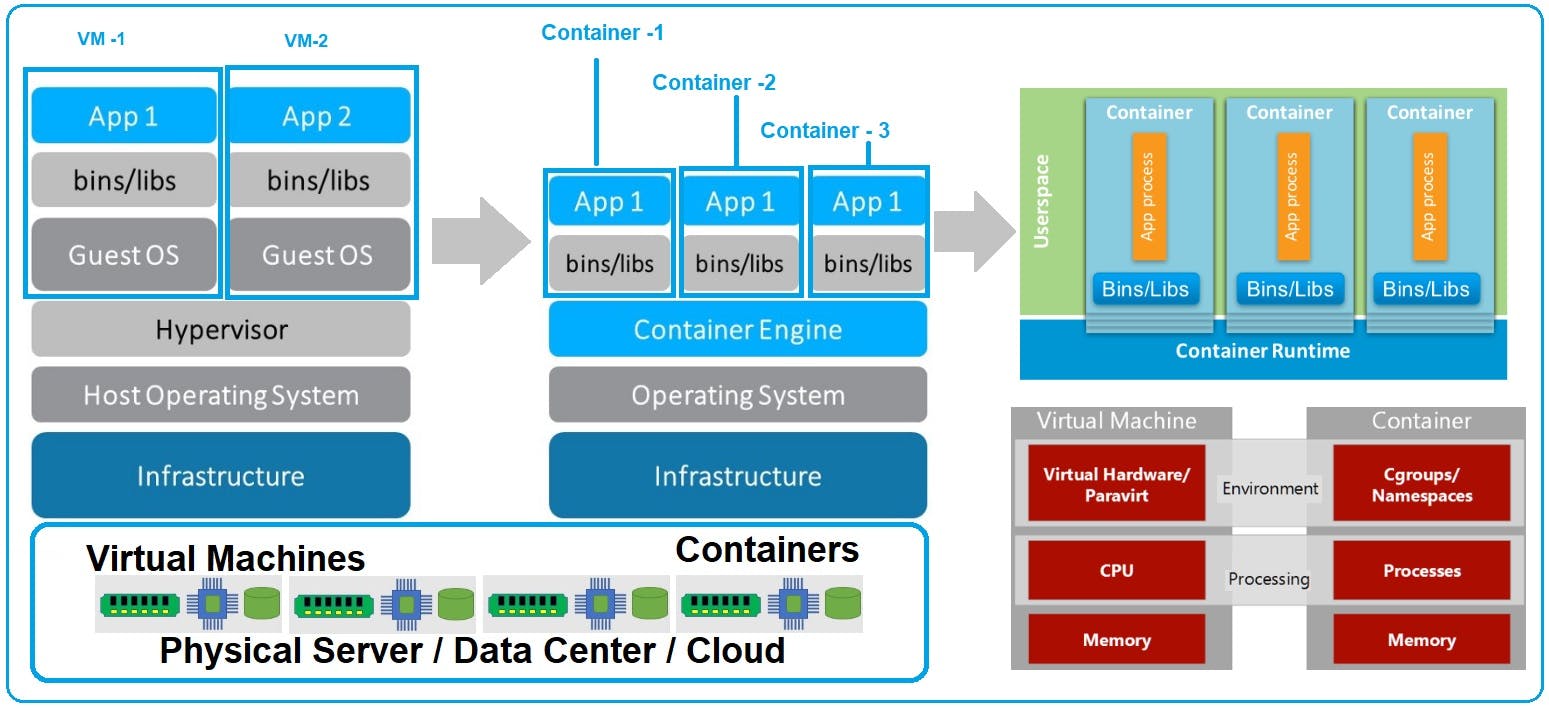

Docker vs. Virtualization Architecture Comparison:

Let's compare Docker and virtualization architectures and discuss why Docker is preferred in many scenarios.

1. Resource Utilization:

Virtualization: Each virtual machine (VM) runs a complete guest operating system on virtualized hardware, which adds significant resource overhead. Every VM needs its own allocation of memory, storage, and CPU.

Docker: Docker containers share the host system's kernel and resources, resulting in minimal overhead. Containers are lightweight and start much faster compared to VMs.

2. Isolation:

Virtualization: VMs provide strong isolation by running multiple operating systems on a single physical machine. Each VM has its own kernel and complete OS instance.

Docker: Containers offer process-level isolation, meaning they share the host OS kernel but run as isolated processes. Because the kernel is shared, this isolation is weaker than that of a VM, but it is adequate for many applications and comes with much lower resource overhead.

3. Architecture:

Virtualization: Virtualization relies on a hypervisor to manage and allocate hardware resources to multiple VMs. There are two types of hypervisors: Type 1 (bare-metal) and Type 2 (hosted).

Docker: Docker uses a client-server architecture, with the Docker daemon managing containers and the Docker CLI providing a user-friendly interface. Containers run on top of a container runtime, such as containerd.

4. Start Time:

Virtualization: VMs typically take longer to start due to the need to boot an entire operating system.

Docker: Containers start almost instantly since they leverage the host system's kernel and only need to initialize the application process.

5. Portability:

Virtualization: VMs are less portable due to their larger footprint and dependency on specific hypervisors.

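Docker: Docker containers are highly portable, thanks to their lightweight nature and standardized image format. An image can run on any host with Docker installed, provided the host matches the CPU architecture and operating system type the image was built for, which simplifies application deployment across different environments.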
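The resource difference in the comparison above comes down to simple arithmetic: every VM pays for a guest OS, while containers share one kernel. The sketch below works through that with made-up numbers. The figures (10 applications, 256 MB per application, 512 MB per guest OS) are purely illustrative assumptions, not measurements, and the function names are hypothetical.

```python
def vm_memory_mb(apps, app_mb, guest_os_mb):
    """Total memory when each app runs in its own VM with its own guest OS."""
    return apps * (app_mb + guest_os_mb)


def container_memory_mb(apps, app_mb, runtime_overhead_mb=0):
    """Total memory when apps run as containers sharing the host kernel."""
    return apps * app_mb + runtime_overhead_mb


if __name__ == "__main__":
    # Illustrative inputs only; real overheads vary widely by OS and workload.
    apps, app_mb, guest_os_mb = 10, 256, 512
    print("VMs:       ", vm_memory_mb(apps, app_mb, guest_os_mb), "MB")  # 7680 MB
    print("Containers:", container_memory_mb(apps, app_mb), "MB")        # 2560 MB
```

With these assumed inputs the guest OS copies account for two thirds of the VM total, which is the overhead containers avoid.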

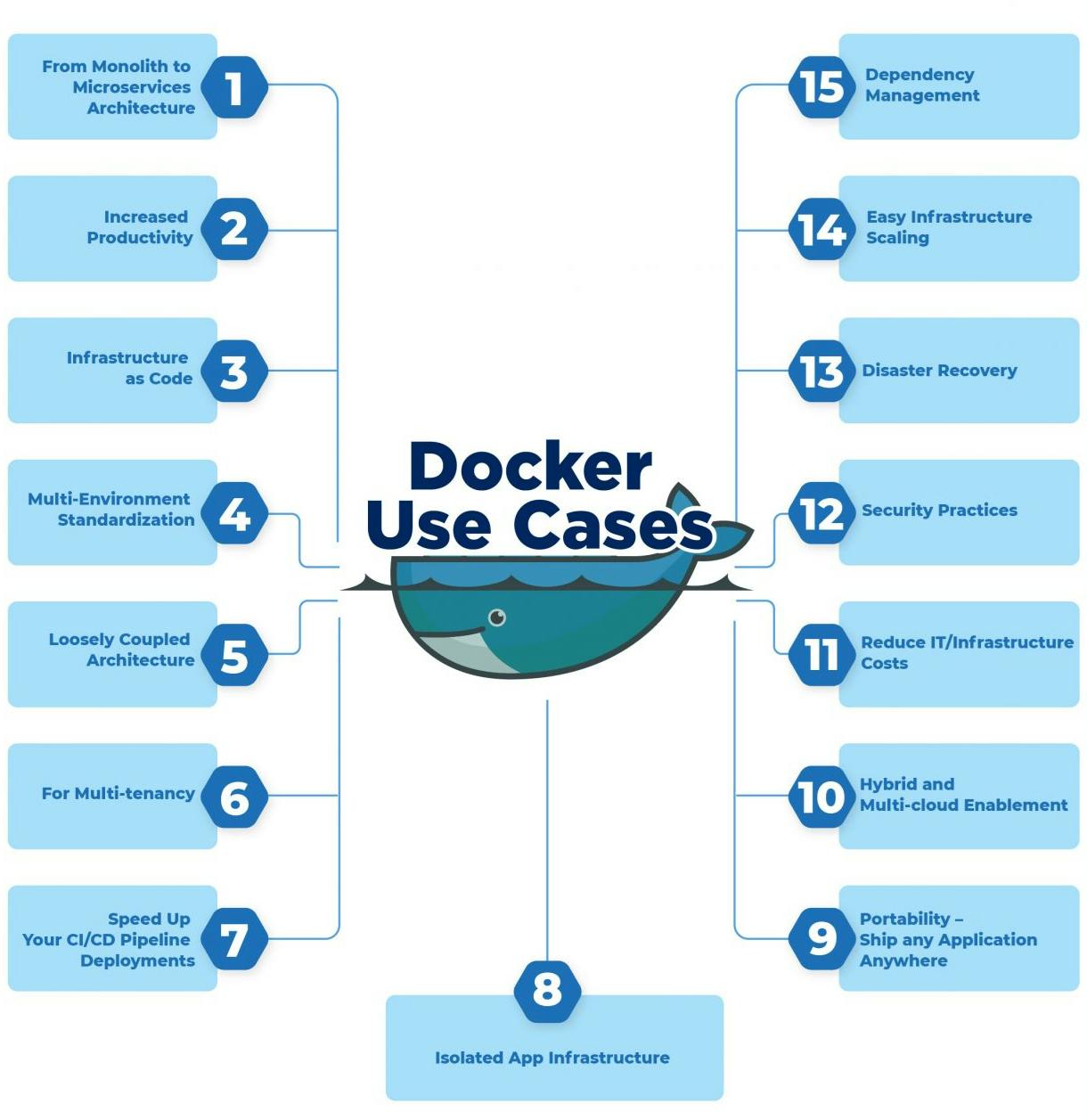

Why Docker is Preferred:

Efficiency: Docker containers are lightweight and share resources, resulting in efficient resource utilization and faster application startup times compared to virtual machines.

Portability: Docker containers are highly portable, making it easy to move applications between different environments, from development to production, without worrying about compatibility issues.

Consistency: Docker ensures consistency between development, testing, and production environments, reducing the "works on my machine" problem and making it easier to maintain and troubleshoot applications.

Scalability: Docker's lightweight architecture and support for container orchestration platforms like Kubernetes make it easy to scale applications horizontally and vertically to meet changing workload demands.

DevOps Integration: Docker integrates seamlessly with DevOps tools and practices, facilitating continuous integration, continuous deployment (CI/CD), and automation workflows.

Resource Efficiency: Because containers do not each carry a full guest OS, more workloads can typically run on the same hardware than with traditional virtualization, which can lower infrastructure costs.

Conclusion:

While both Docker and virtualization offer benefits in terms of resource isolation, portability, and scalability, Docker's lightweight architecture, efficient resource utilization, and ease of management make it a preferred choice for many modern application deployment scenarios. By leveraging Docker's capabilities, organizations can streamline their development and deployment workflows, achieve greater agility, and accelerate time-to-market for their applications.