Introduction:

Kubernetes, often abbreviated K8s, is a widely used container orchestration platform that has changed how modern applications are deployed and managed. It grew out of Google's experience with its internal cluster manager, Borg, was released as an open-source project in 2014, and has since become a cornerstone of cloud-native computing. This guide covers the Kubernetes architecture, its core components, and the fundamental concepts behind its operation.

Understanding Kubernetes Essentials:

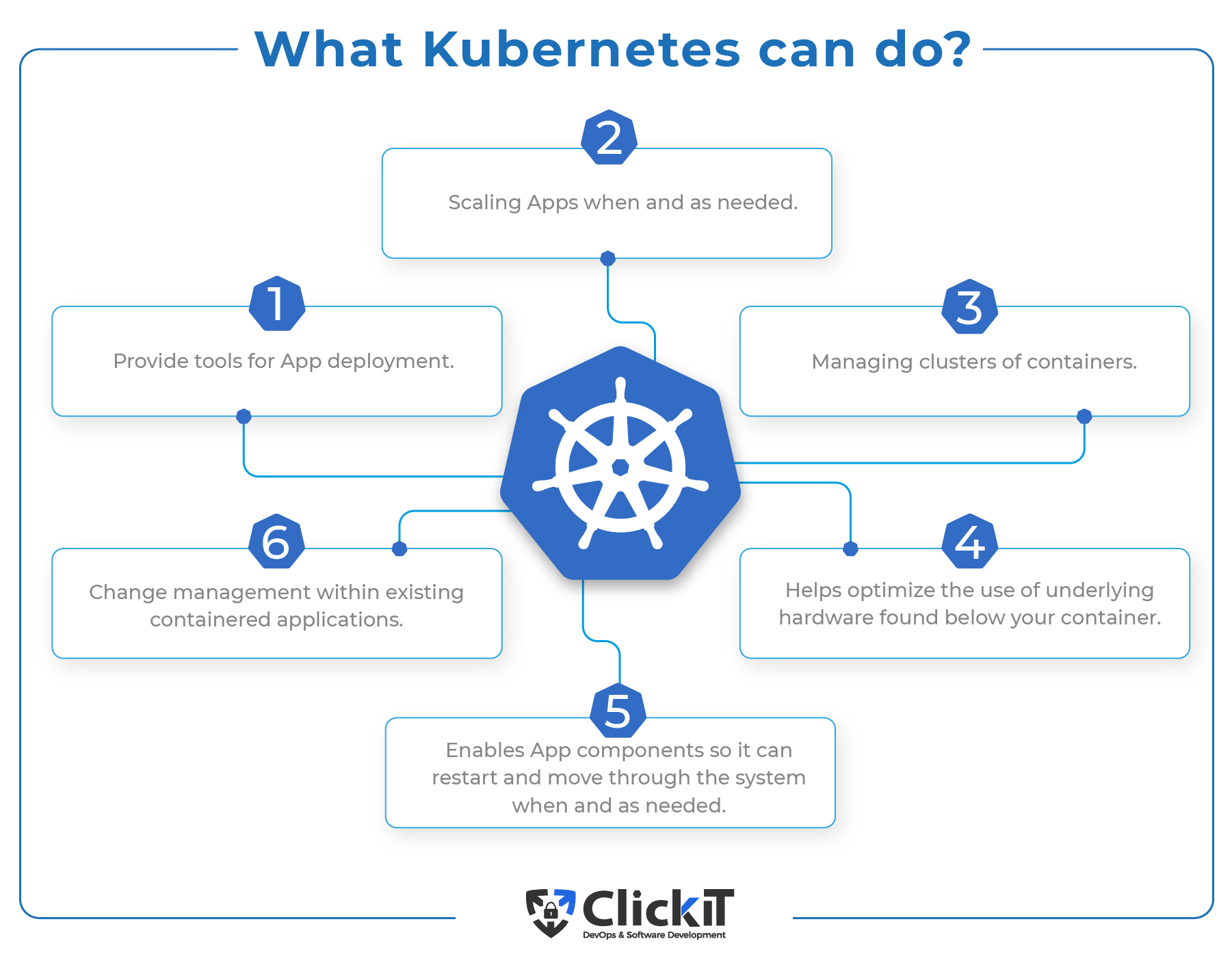

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and load balancing of containers across diverse environments. Its key features include:

Orchestration: Manages groups of containers across the machines in a cluster.

Autoscaling: Adds or removes replicas as load changes, based on metrics such as CPU utilization.

Auto Healing: Restarts or replaces failed containers to keep the application available.

Load Balancing: Distributes incoming traffic across multiple container instances.

Platform Independence: Runs on cloud, virtual, and physical infrastructure.

Fault Tolerance: Reschedules workloads to keep applications available when nodes or pods fail.

Rollback: Reverts an application to a previous version when a release goes wrong.

Health Monitoring: Probes container health to detect failures and trigger recovery.

Batch Execution: Runs one-off, sequential, and parallel batch workloads.
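
To make the autoscaling feature concrete, here is a minimal sketch of the ratio formula that the Horizontal Pod Autoscaler documentation describes: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name and the sample numbers are illustrative assumptions, and the real autoscaler also applies a tolerance band and minimum and maximum replica bounds that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count suggested by the ratio formula (hypothetical helper)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current count by how far the observed metric is from the target.
    return math.ceil(current_replicas * current_metric / target_metric)


# Four replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))  # prints 6
```

Rounding up rather than down means the workload is never left short of capacity by the calculation itself.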

Deciphering Kubernetes Architecture:

Master Node and Worker Node in Kubernetes: Understanding Their Roles

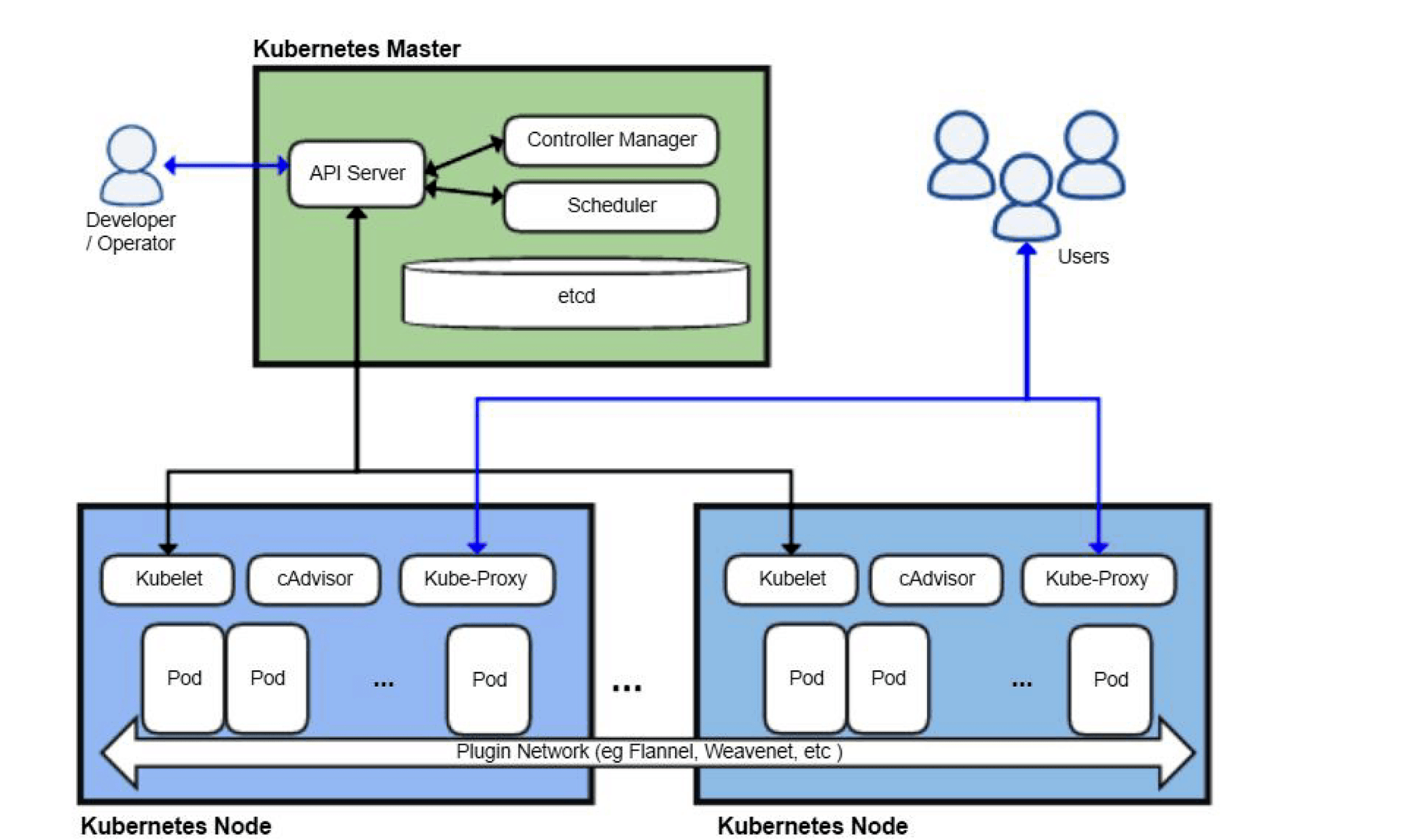

In the Kubernetes architecture, the master node and the worker nodes play distinct but complementary roles in running containerized applications. The sections below describe the responsibilities of each:

Master Node:

The master node hosts the control plane of the Kubernetes cluster (current Kubernetes documentation calls it the control plane node), which manages the cluster and the applications running on it. Its key components are:

kube-apiserver:

Acts as the front end of the Kubernetes control plane, exposing the Kubernetes API.

Validates and processes API requests from users and from other cluster components, such as deploying applications, scaling resources, and querying cluster state.
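
The API is a REST interface, so each request targets a resource path. The sketch below builds such paths for namespaced resources: resources in the core group sit under `/api/v1`, while resources in named groups such as `apps` sit under `/apis/<group>/<version>`. The helper name and the sample resource names are assumptions made for illustration.

```python
def resource_path(resource: str, namespace: str, name: str = "",
                  group: str = "", version: str = "v1") -> str:
    """Build the REST path for a namespaced resource (hypothetical helper)."""
    # Core-group resources use /api/<version>; named groups use /apis/<group>/<version>.
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


print(resource_path("pods", "default"))
# /api/v1/namespaces/default/pods
print(resource_path("deployments", "default", name="web", group="apps"))
# /apis/apps/v1/namespaces/default/deployments/web
```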

etcd:

Functions as a distributed, consistent key-value store that holds the configuration data and state of the cluster.

Stores both the desired and the observed state of every cluster object, and is typically replicated across several members for consistency and fault tolerance.
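
As a rough illustration of what "key-value store" means here, the toy class below keeps serialized objects under hierarchical keys and bumps a revision counter on every write. It is an in-memory stand-in, not etcd and not an etcd client; the class name is hypothetical, and the key layout follows the API server's default `/registry` prefix but should be treated as illustrative.

```python
from typing import Optional


class TinyStore:
    """In-memory stand-in for a revisioned key-value store (not etcd itself)."""

    def __init__(self) -> None:
        self._data = {}
        self.revision = 0  # bumped on every write, loosely like a store-wide revision

    def put(self, key: str, value: str) -> int:
        self._data[key] = value
        self.revision += 1
        return self.revision

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def list_prefix(self, prefix: str) -> list:
        # Prefix listing is how "all pods in a namespace" style queries are served.
        return sorted(k for k in self._data if k.startswith(prefix))


store = TinyStore()
store.put("/registry/pods/default/web-1", '{"phase": "Running"}')
store.put("/registry/pods/default/web-2", '{"phase": "Pending"}')
store.put("/registry/deployments/default/web", '{"replicas": 2}')
print(store.list_prefix("/registry/pods/default/"))
print(store.revision)
```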

kube-scheduler:

Assigns newly created pods to worker nodes based on resource requests, node capacity, and constraints such as affinity rules and taints.

Aims for efficient resource utilization and balanced load across the cluster.
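
Scheduling can be pictured as two passes: filter out the nodes that cannot run the pod, then score the remaining nodes and pick the best. The sketch below is a simplified model of that idea, assuming only CPU and memory matter and scoring by leftover CPU, then leftover memory. The `Node` type, the `schedule` function, and the scoring rule are assumptions for illustration; the real scheduler combines many filter and scoring plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def schedule(cpu_millis: int, memory_mib: int, nodes: list) -> Optional[str]:
    """Pick a node for a pod, or return None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory for the request.
    feasible = [n for n in nodes
                if n.free_cpu_millis >= cpu_millis and n.free_memory_mib >= memory_mib]
    if not feasible:
        return None  # the pod would stay unscheduled
    # Score: prefer the node with the most CPU, then memory, left after placement.
    best = max(feasible, key=lambda n: (n.free_cpu_millis - cpu_millis,
                                        n.free_memory_mib - memory_mib))
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096), Node("node-c", 4000, 512)]
print(schedule(1000, 1024, nodes))  # node-b: the only node with enough CPU and memory
print(schedule(250, 256, nodes))    # node-c: every node fits, node-c has the most CPU left
```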

kube-controller-manager:

Runs the built-in controllers, each of which continuously works to move the current state of the cluster toward the desired state.

These controllers watch cluster resources, respond to node failures, maintain replica counts, and drive rolling updates.
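
The core pattern behind every controller is a reconcile step: compare the desired state with what is observed and emit the actions that close the gap. Here is a minimal sketch for replica management; the function name, the action strings, and the choice of which surplus pods to delete are all assumptions made for illustration.

```python
def reconcile(desired_replicas: int, running_pods: list) -> list:
    """Return the actions that would move the observed state to the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for the missing ones to be created.
        return ["create pod"] * diff
    if diff < 0:
        # Too many pods: remove the surplus (picking the last ones is arbitrary here).
        return [f"delete {name}" for name in running_pods[diff:]]
    return []  # already converged, nothing to do


print(reconcile(3, ["web-a"]))
print(reconcile(1, ["web-a", "web-b", "web-c"]))
```

A real controller runs this comparison repeatedly, triggered by changes it observes through the API server, so a single failed action is simply retried on the next pass.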

The master node serves as the brain of the Kubernetes cluster, overseeing the overall operation and coordination of containerized workloads.

Worker Node:

The worker node (called a minion in early Kubernetes releases, a term that is no longer used) runs the application workloads assigned to it by the master node. The following components run on each worker node:

kubelet:

Acts as the primary node agent, communicating with the kube-apiserver and managing the containers on its node.

Watches the API server for pods assigned to its node and creates, updates, or removes their containers through the container runtime.
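
Part of managing containers is checking their health. With a liveness probe configured, the kubelet restarts a container after the probe fails a configured number of times in a row (`failureThreshold`, which defaults to 3). The sketch below models only that counting rule; the function name and the list-of-booleans input are assumptions made for illustration.

```python
def should_restart(probe_results: list, failure_threshold: int = 3) -> bool:
    """True if the history contains `failure_threshold` consecutive failed probes."""
    consecutive_failures = 0
    for ok in probe_results:
        # A successful probe resets the streak; a failed one extends it.
        consecutive_failures = 0 if ok else consecutive_failures + 1
        if consecutive_failures >= failure_threshold:
            return True
    return False


print(should_restart([True, False, False, False]))  # True: three failures in a row
print(should_restart([False, False, True, False]))  # False: the streak was broken
```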

kube-proxy:

Maintains network rules on the node so that traffic addressed to a Kubernetes Service reaches one of the pods backing it.

Implements the Service abstraction, including load balancing across the endpoints of a Service, typically by programming iptables or IPVS rules.
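
The load-balancing idea can be sketched as picking one backend per connection from the list of endpoints behind a Service. The toy class below uses round-robin selection, which is one possible strategy and is chosen here because it is easy to follow. The class name and addresses are hypothetical, and the real kube-proxy programs kernel-level rules rather than selecting backends in a Python process.

```python
from itertools import cycle


class ServiceBackends:
    """Toy round-robin selector over the pod endpoints behind a Service."""

    def __init__(self, endpoints: list) -> None:
        if not endpoints:
            raise ValueError("a Service needs at least one endpoint to route to")
        self._next = cycle(endpoints)  # endless iterator over the endpoints

    def pick(self) -> str:
        return next(self._next)


backends = ServiceBackends(["10.244.1.5:8080", "10.244.2.7:8080"])
print([backends.pick() for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```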

Container Runtime:

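Software responsible for running containers, such as containerd or CRI-O. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim, it requires the cri-dockerd adapter.

Pulls and runs container images, manages the container lifecycle, and provides isolation and resource limits.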

The worker node serves as the execution environment for containerized applications, running multiple pods and containers to fulfill the desired workload.

Collaboration Between Master and Worker Nodes:

The master node and worker nodes collaborate to ensure the smooth operation of the Kubernetes cluster. The master node dictates the desired state of the cluster, while the worker nodes execute and maintain that state. Here's how they interact:

The master node receives requests from users or external systems via the kube-apiserver.

The kube-apiserver validates each request and records the resulting desired state in etcd.

The kube-scheduler assigns pods to worker nodes, considering factors like resource availability and affinity.

The kubelet on each worker node watches the kube-apiserver for pods assigned to its node, starts their containers, and reports pod health back to the master node.

kube-proxy on the worker nodes routes traffic addressed to Services to the pods behind them. Network policies, where used, are enforced by the network plugin rather than by kube-proxy.

In essence, the master node orchestrates the cluster's operation, while the worker nodes execute the workload, collectively ensuring the efficient deployment and management of containerized applications in Kubernetes.

Networking:

Container Network Interface (CNI): A specification and plugin interface that Kubernetes uses to configure networking for containers.

Pod Network: Gives every pod its own IP address and lets pods communicate across nodes.

Network plugins are what enable communication between pods on different nodes in the cluster. They abstract the underlying network details and provide pod-to-pod connectivity, and some also enforce network policies. Service discovery and load balancing build on this connectivity through cluster DNS and kube-proxy. Popular network plugins include Flannel, Calico, Cilium, and Weave Net, each with its own features and trade-offs.
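
One common way to give pods unique addresses across nodes is to carve a cluster-wide address range into one subnet per node, so that a pod's IP also identifies the node it lives on. The sketch below shows that arithmetic with Python's `ipaddress` module. The `10.244.0.0/16` range, the `/24` per-node size, and the function name are assumptions for illustration; actual ranges depend on how the cluster and its network plugin are configured.

```python
import ipaddress


def allocate_pod_cidrs(cluster_cidr: str, node_names: list, node_prefix: int = 24) -> dict:
    """Assign each node its own pod subnet carved out of the cluster range."""
    network = ipaddress.ip_network(cluster_cidr)
    available = 2 ** (node_prefix - network.prefixlen)  # number of per-node subnets
    if len(node_names) > available:
        raise ValueError("cluster range is too small for this many nodes")
    subnets = network.subnets(new_prefix=node_prefix)
    return {name: str(subnet) for name, subnet in zip(node_names, subnets)}


print(allocate_pod_cidrs("10.244.0.0/16", ["node-1", "node-2"]))
# {'node-1': '10.244.0.0/24', 'node-2': '10.244.1.0/24'}
```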

Add-ons:

DNS: Provides DNS-based service discovery within the cluster, typically through CoreDNS.

Dashboard: Web-based user interface for cluster management.

Ingress Controller: Implements Ingress rules, routing external HTTP and HTTPS traffic to services within the cluster.
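
For the DNS add-on, service discovery comes down to a naming convention: a Service is reachable at `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is `cluster.local` unless the cluster was configured otherwise. The helper below just assembles that name; the function name and the sample Service names are hypothetical.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Fully qualified in-cluster DNS name for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("web"))
# web.default.svc.cluster.local
print(service_dns_name("postgres", namespace="data"))
# postgres.data.svc.cluster.local
```

Pods in the same namespace can usually use the short name (`web`) because their DNS search path fills in the rest.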

Working with Kubernetes:

Deploying and managing applications in Kubernetes involves the following steps:

Manifest Creation: Define application configurations in YAML or JSON manifest files.

Cluster Configuration: Apply the manifest files to the Kubernetes cluster to declare the desired state, which the cluster then works to reach.

Pod Management: Pods, the smallest deployable unit in Kubernetes, host one or more containers and run on worker nodes under the control of the master.

Higher-level Objects: Use higher-level Kubernetes objects such as Deployments, Services, and Ingress for advanced management and networking.

Scaling and Replication: Use ReplicaSets, usually managed through a Deployment, to keep the required number of pod replicas running. ReplicationControllers are the older, legacy equivalent.

Deployment and Rollback: Deploy applications using Deployment objects, which allow controlled rollouts, updates, and rollbacks.

Networking: Use Kubernetes networking for communication between pods and for external access to services.

Labels and Selectors: Organize and select Kubernetes objects using labels and selectors for efficient management.
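
Several of these steps can be seen in one small example. The sketch below builds a Deployment manifest as a Python dictionary and serializes it to JSON, then shows the equality-based matching that ties a selector to pod labels. The application name, image tag, labels, and both function names are hypothetical; the field names (`apiVersion`, `kind`, `metadata`, `spec`, `selector.matchLabels`, `template`) are those of the `apps/v1` Deployment schema.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, labels: dict) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selection: every selector pair must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


manifest = deployment_manifest("web", "nginx:1.25", replicas=3, labels={"app": "web"})
print(json.dumps(manifest, indent=2))
print(matches({"app": "web"}, {"app": "web", "tier": "frontend"}))  # True
print(matches({"app": "web"}, {"app": "db"}))                       # False
```

Saved to a file, the JSON output is the kind of manifest that `kubectl apply -f` accepts. Generating manifests from code like this is one option among several; many teams write the YAML by hand or use a templating tool instead.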

Conclusion:

Kubernetes is a powerful platform for automating the management of containerized applications, offering scalability, reliability, and flexibility across diverse environments. By understanding its architecture and core concepts, developers and operators can take full advantage of cloud-native computing and deploy modern applications quickly and reliably.

In the next parts of this series, we will delve deeper into advanced Kubernetes topics, including service mesh, persistent storage, security, and best practices for managing Kubernetes clusters in production environments. Stay tuned for more insights and practical guidance on navigating the Kubernetes ecosystem effectively.